10 Questions To Ask As The World Moves To Zero-Click Search

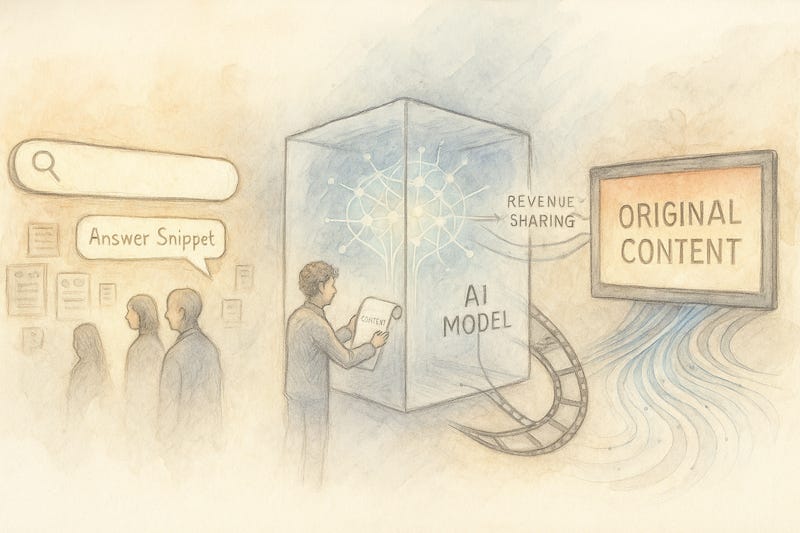

What will the new AI-native internet look like?

Welcome to Infinite Curiosity, a newsletter that explores the intersection of Artificial Intelligence and Startups. Tech enthusiasts across 200 countries have been reading what I write. Subscribe for free to receive it directly in your inbox.

According to a recent report by Bain and Dynata, 80% of users now rely on zero-click results for at least 40% of their queries. When I think about the new AI-native internet, here are 10 questions that come to mind:

1. When search engines give you the answer upfront, why would anyone still click through to a website?

Zero-click snippets satisfy “quick fact” needs. But they rarely cover edge cases, local context, or deep explanations. When the answer matters (e.g. making a purchase, trusting medical advice, citing a source), users still crave primary pages for authority and nuance.

Publishers that package depth, interactive tools, or community discussions will keep earning clicks because AI summaries can’t replicate experiential detail. In practice, zero-click raises the bar: thin listicles will disappear and rich explainers will thrive. So clicks won’t vanish. They’ll concentrate around content that adds value beyond a paragraph-length summary.

2. If website owners stop getting views, what new incentives will keep them publishing?

The old incentive stack centered on ad revenue, affiliate links, and traffic, and it erodes when impressions disappear. In its place, we’ll see direct monetization (newsletters, memberships, paid APIs) and reputation building for off-platform opportunities (jobs, speaking gigs). We may also see more publishing by individuals who value influence over income.

Governments and NGOs may subsidize verified knowledge to fight misinformation. Niche communities can pool micro-payments to fund specialized sites, e.g. a Patreon for reference material. In short, the economics won’t die. They’ll shift from page views to relationship capital and direct support.

3. Will zero-click search turn the open web into a quiet library of unread pages?

If extraction outweighs visitation, then the long tail of hobbyist pages will go away. Yet history shows that every platform shift (RSS readers, social feeds, mobile apps) kills some surface content while seeding new formats.

So we can expect fewer disposable posts but more durable/evergreen resources optimized for AI model ingestion. Think GitHub-style docs or structured data stores that welcome crawlers. The web won’t go silent. It will just sound more like a reference archive.

4. Should writers start posting straight to models like OpenAI instead of personal blogs?

Direct submission could guarantee distribution inside AI answers and even earn royalties someday. This mirrors how musicians upload to Spotify.

But skipping your own site forfeits first-party data, reader emails, and design control. A hybrid path makes sense, i.e. publish on your domain, expose a clean “LLM feed” (Markdown, RSS-plus-metadata), and opt in to model repositories.

This way you serve both humans and AIs without being locked in. Until revenue-share terms mature, keeping ownership of your canonical copy is practical insurance.
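To make the “LLM feed” idea a little more concrete, here is a minimal sketch of what such a feed could look like if you exposed it as JSON. No standard for this exists as far as I know, so every field name here (`canonical_url`, `license`, `body_markdown`) and the `build_llm_feed` helper are my own assumptions for illustration.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Post:
    # Hypothetical fields: pick whatever metadata you want crawlers to see.
    title: str
    canonical_url: str   # points back to the copy you own
    author: str
    license: str         # the terms under which models may use the text
    body_markdown: str   # clean Markdown, no layout or ad markup


def build_llm_feed(site_name, posts):
    """Serialize posts into a single JSON document that a crawler could fetch."""
    feed = {
        "site": site_name,
        "version": 1,
        "items": [asdict(post) for post in posts],
    }
    return json.dumps(feed, indent=2)


posts = [
    Post(
        title="Example post",
        canonical_url="https://example.com/posts/example-post",
        author="Jane Doe",
        license="all-rights-reserved",
        body_markdown="# Example post\n\nBody text goes here.",
    )
]
feed_json = build_llm_feed("example.com", posts)
```

The point of the `canonical_url` field is that the feed always points back to the copy on your own domain, so you stay the source of record even if a model repository ingests the text.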

5. Who owns and profits from the content once it’s ingested by an LLM?

Copyright still belongs to the original author unless they’ve licensed it away. Ingestion doesn’t erase rights. But the legal gray zone is “use”. Summaries and transformations may qualify as fair use, but extensive quotation or style cloning may not.

Courts appear to be converging on a middle path where training is likely permissible but output reuse of copyrighted chunks is not. Profit will hinge on contracts. Creators who whitelist their work for training can demand revenue splits or lump-sum fees. Think of it as syndication to a new algorithmic publisher.

6. Could a revenue-sharing model (like X or YouTube) emerge for AI answers?

Yes. Early prototypes already exist, e.g. Perplexity’s program to share revenue with creators and Google’s AI-powered search ads experiment with attribution-based payouts.

The mechanics resemble streaming royalties. The AI models log which sources they draw from and allocate a slice of revenue proportionally. The key hurdles are attribution accuracy (token-level tracing) and preventing spammy content farms. Once those are solved, expect a structure where high-trust sources earn a premium, much as YouTube favors watch-time and retention today.
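The proportional split is simple to sketch. The example below assumes the model has already produced a log of which source each answer drew from, which is the hard part in practice. The function name, the `trust_weights` multiplier, and the example domains are all hypothetical. This is not how any existing program computes payouts.

```python
from collections import Counter


def allocate_revenue(citation_log, revenue, trust_weights=None):
    """Split `revenue` across sources in proportion to weighted citation counts.

    citation_log: list of source identifiers, one entry per citation.
    trust_weights: optional multiplier per source (defaults to 1.0),
                   standing in for the "high-trust premium" idea.
    """
    trust_weights = trust_weights or {}
    counts = Counter(citation_log)
    weighted = {
        source: count * trust_weights.get(source, 1.0)
        for source, count in counts.items()
    }
    total = sum(weighted.values())
    if total == 0:
        return {}
    return {source: revenue * w / total for source, w in weighted.items()}


log = ["site-a.example", "site-b.example", "site-a.example", "site-c.example"]
payouts = allocate_revenue(log, revenue=100.0, trust_weights={"site-c.example": 2.0})
# site-a: 2 citations, site-b: 1 citation, site-c: 1 citation with a 2x weight,
# so the 100.0 is split 40 / 20 / 40.
```

A content farm that gets cited often but carries a low trust weight would earn little under a scheme like this. That is why attribution accuracy and source quality have to be solved together.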

7. As models improve, will we still care about human-authored nuance?

Humans prize voice and lived experience. These are dimensions that current models can approximate but don’t actually feel themselves. Think of restaurant reviews for a second. The aggregated sentiment is handy, yet a single vivid story can sway a decision.

Consider domains like research, investigative journalism, and creative nonfiction. The credibility in these domains still stems from a named expert willing to stake reputation. AI will draft, compress, and translate. But audiences will look for bylines as authority markers. Nuance shifts from raw wording to the author’s unique lens and accountability.

8. What happens to discovery and serendipity when AI funnels everyone to the same “best” answer?

If ranking algorithms are left unchecked, they converge on a monoculture that’s efficient but dull. Counter-measures include explicit randomness knobs (“surprise me”), multi-angle panels, and provenance filters that let users pivot across time, region, or ideology.

Product teams already embed diversity penalties so the top five results aren’t clones. In the long term, AI can increase serendipity by surfacing obscure yet relevant sources that SEO never favored.
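One common way to express a diversity penalty is a greedy re-rank in the spirit of maximal marginal relevance. Each pick is scored by its relevance minus a penalty for how similar it is to what has already been picked. The sketch below uses word overlap (Jaccard similarity) as a stand-in for a real similarity measure, and the `penalty` value and sample results are assumptions for illustration.

```python
def jaccard(a, b):
    """Word-overlap similarity between two strings, from 0.0 to 1.0."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    union = words_a | words_b
    return len(words_a & words_b) / len(union) if union else 0.0


def rerank_with_diversity(candidates, k=5, penalty=0.7):
    """Greedily pick k results from a list of (text, relevance) pairs.

    Each step picks the candidate with the highest
    relevance - penalty * (max similarity to anything already picked).
    """
    remaining = list(candidates)
    picked = []
    while remaining and len(picked) < k:
        def score(item):
            text, relevance = item
            redundancy = max((jaccard(text, p[0]) for p in picked), default=0.0)
            return relevance - penalty * redundancy

        best = max(remaining, key=score)
        picked.append(best)
        remaining.remove(best)
    return picked


results = [
    ("best pizza in town guide", 0.95),
    ("best pizza in town guide updated", 0.94),
    ("history of neapolitan pizza ovens", 0.80),
]
top2 = rerank_with_diversity(results, k=2)
# The near-duplicate second result is skipped in favor of the less
# relevant but different third one.
```

Setting `penalty` to 0 gives back a plain relevance ranking, so the same knob could double as the “surprise me” control.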

9. Using Netflix as a guide, when might OpenAI move from aggregating the web to funding original content itself?

Netflix waited until streaming had scale (~30M subscribers) before pouring billions into original content. OpenAI might follow a similar adoption curve. Once ChatGPT reaches daily utility for a large enough user base, the marginal returns on exclusive “AI-first” knowledge would justify the spend.

We can expect pilot programs within two years, e.g. sponsored expert explainers, proprietary technical manuals, and interactive courses. The originals serve two goals: (i) differentiation and (ii) data that rivals can’t scrape. This mirrors Netflix’s bid to escape license renegotiations.

10. Who will end up owning the AI-native transition?

Ownership will be plural. Foundation model labs control the pipes, cloud giants supply the infrastructure, chip makers supply the GPUs, and creators hold the raw fuel of fresh ideas.

Regulatory bodies will insist on transparency and fair-use guardrails, forcing shared governance. The likely equilibrium would be a tripartite system where platforms run the engines, creators license content through opt-in frameworks, and users pay (directly or via ads) for synthesized knowledge. History’s lesson is clear. Revolutions redistribute power, but they rarely centralize it forever.

If you're a founder or an investor who has been thinking about this, I'd love to hear from you.

If you are getting value from this newsletter, consider subscribing for free and sharing it with 1 friend who’s curious about AI.