What Should An AI-First Programming Language Look Like?

If we were to design a new programming language just for AI, what do we want from it?

Welcome to Infinite Curiosity, a newsletter that explores the intersection of Artificial Intelligence and Startups. Tech enthusiasts across 200 countries have been reading what I write. Subscribe to this newsletter for free to receive it directly in your inbox.

I recently came across the following question: If we were to design a new programming language just for AI, what should it look like? So I decided to tackle it in this post.

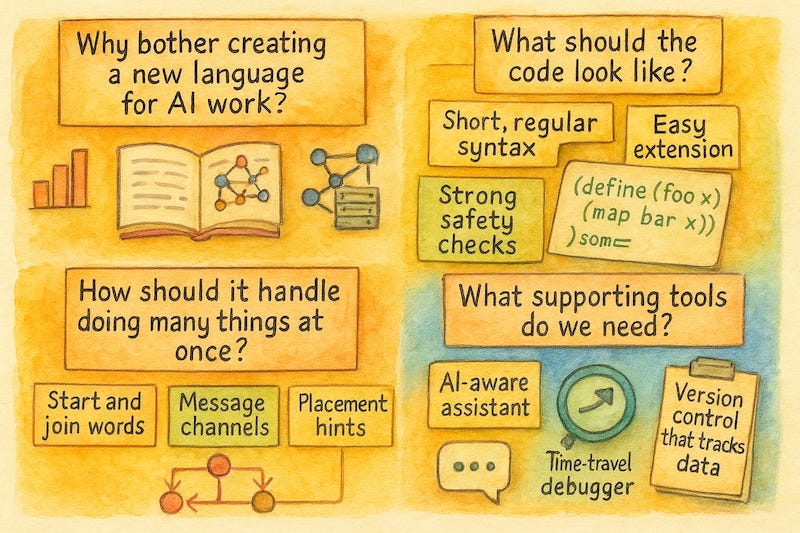

Why bother creating a new language for AI work?

Most of today’s widely used languages were designed decades ago: C in the early 1970s, Python and Java in the 1990s. They were meant for writing step‑by‑step commands that a single computer follows. AI work is different. You juggle giant data sets, train models that change over time, and run code on whole clusters of machines rather than a single laptop.

Trying to force these new tasks into old languages leads to piles of add‑ons, work‑arounds, and copy‑and‑paste scripts. A fresh language built with AI in mind can bake in the things we keep bolting on, i.e., handling big data, working smoothly with GPUs, and letting code and models grow side by side. This lets developers spend less time wiring pieces together and more time solving real problems.

What should the code look like in this new language?

A good AI‑first language keeps the surface simple and flexible. Here’s what that means:

Short, regular syntax: Think of Lisp, Scheme, or Clojure. In these languages, code is written as nested lists that all share one shape, (operator argument ...), instead of many special cases. This makes it easy for both people and AI helpers to read and reshape programs.

Strong safety checks: Borrow ideas from languages like Rust or Haskell, whose type systems catch whole classes of mistakes before the program even runs. Then aim those checks at AI‑specific errors such as mismatched data shapes.

Easy extension: If a new hardware chip or mathematical optimization shows up, you add a small definition and the whole language understands it. You don't have to wait for an update to a giant library.
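To make the first point concrete, here is a minimal Python sketch that assumes a Lisp‑style syntax where every form is (operator argument ...). One small reader turns source text into nested lists. A tool, human or AI, can then rewrite the program with ordinary list operations. The operator names (relu, add, matmul) and the simplify rule are hypothetical and are here only for illustration.

```python
def tokenize(src: str) -> list[str]:
    """Split source text into parentheses and atoms."""
    return src.replace("(", " ( ").replace(")", " ) ").split()


def read(tokens: list[str]):
    """Build a nested list from the token stream, consuming tokens from the front."""
    token = tokens.pop(0)
    if token == "(":
        form = []
        while tokens[0] != ")":
            form.append(read(tokens))
        tokens.pop(0)  # drop the closing ")"
        return form
    return token  # an atom such as "relu" or "0"


def simplify(form):
    """Hypothetical rewrite rule: (add x 0) and (add 0 x) become x, applied recursively."""
    if not isinstance(form, list):
        return form
    form = [simplify(part) for part in form]
    if len(form) == 3 and form[0] == "add":
        if form[2] == "0":
            return form[1]
        if form[1] == "0":
            return form[2]
    return form


def show(form) -> str:
    """Print a nested list back out as source text."""
    if isinstance(form, list):
        return "(" + " ".join(show(part) for part in form) + ")"
    return form


program = read(tokenize("(relu (add (matmul w x) 0))"))
print(program)                  # ['relu', ['add', ['matmul', 'w', 'x'], '0']]
print(show(simplify(program)))  # (relu (matmul w x))
```

Because every piece of code has the same shape, the reader, the rewriter, and the printer each fit in a few lines. With many special‑case constructs, each of those tools would need to know every one of them.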

With these traits, the language feels light on the surface yet solid underneath. It is simple enough for quick experiments and robust enough for production.
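Python has no compile‑time shape checks, so the sketch below only emulates the safety idea. A separate pass walks a model's declared layer sizes and rejects a mismatch before any data is processed. The Linear class and the check_shapes function are hypothetical. A statically typed AI‑first language could encode the same rule in its types, so the compiler would report the error instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Linear:
    """A layer that maps vectors of size n_in to vectors of size n_out."""
    n_in: int
    n_out: int


def check_shapes(layers: list[Linear], input_size: int) -> int:
    """Walk the pipeline symbolically and return the final output size.

    No data is touched, so a mismatch is reported before training starts.
    A static type checker would give the same effect at compile time.
    """
    size = input_size
    for i, layer in enumerate(layers):
        if layer.n_in != size:
            raise TypeError(
                f"layer {i} expects size {layer.n_in}, but receives size {size}"
            )
        size = layer.n_out
    return size


model_ok = [Linear(784, 128), Linear(128, 10)]
print(check_shapes(model_ok, 784))  # 10

model_bad = [Linear(784, 128), Linear(64, 10)]  # 128 feeds into 64: mismatch
try:
    check_shapes(model_bad, 784)
except TypeError as err:
    print(err)  # layer 1 expects size 64, but receives size 128
```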

How should it handle doing many things at once?

Training a modern AI model often means splitting work across dozens of GPUs or servers. Instead of hiding this behind mysterious settings, the language should express it directly:

Clear “start” and “join” words for launching parallel tasks and waiting for them to finish, so you always know when work begins and ends.

Message channels that let different tasks pass data without tripping over each other.

Plain placement hints like “run this part on GPU” that the compiler turns into the right low‑level commands.
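Here is a rough Python sketch of the start/join/channel pattern, using the standard threading and queue modules. Each worker computes a stand‑in "partial result" for one data shard and sends it on a queue, which plays the role of a message channel. The main program then joins every task before combining the results. CPython's global interpreter lock keeps pure‑Python threads from computing in parallel, so this shows the shape of the code, not a real speed‑up.

```python
import queue
import threading


def worker(shard, results):
    """Compute a partial result for one data shard and send it on the channel."""
    partial = sum(x * x for x in shard)  # stand-in for a per-shard gradient
    results.put(partial)


data = [float(i) for i in range(1, 9)]
shards = [data[i::4] for i in range(4)]  # split the data four ways
results = queue.Queue()                  # the message channel between tasks

# "start": each task begins at a visible point in the code
tasks = [threading.Thread(target=worker, args=(s, results)) for s in shards]
for t in tasks:
    t.start()

# "join": the program waits here until every task has finished
for t in tasks:
    t.join()

total = sum(results.get() for _ in tasks)
print(total)  # 204.0
```

The workers never touch shared variables. They only hand values to the queue, and that is what keeps them from "tripping over each other".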

Languages such as Go (with its lightweight threads) and Erlang (built for huge phone networks) show that explicit, human‑readable concurrency can be both safe and approachable. Bringing those lessons to AI keeps complex training runs from becoming black boxes.
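Placement hints and easy extension fit together naturally. The sketch below is written in Python and is entirely hypothetical. The on and backend decorators, the device names, and the strings standing in for real code generation are all illustrative assumptions. It tags each step with a device and looks up a lowering function in a registry. Supporting a new chip then means registering one small function rather than waiting for a library update.

```python
from typing import Callable

# Hypothetical registry of device backends: device name -> lowering function.
BACKENDS: dict[str, Callable[[str], str]] = {}


def backend(name: str):
    """Register a function that lowers an operation for device `name`."""
    def register(lower: Callable[[str], str]) -> Callable[[str], str]:
        BACKENDS[name] = lower
        return lower
    return register


@backend("cpu")
def lower_cpu(op: str) -> str:
    return f"cpu_kernel({op})"


@backend("gpu")
def lower_gpu(op: str) -> str:
    return f"gpu_kernel({op})"


def on(device: str):
    """Placement hint: tag a step with the device it should run on."""
    def tag(fn):
        fn.device = device
        return fn
    return tag


def compile_step(fn) -> str:
    """Turn a tagged step into a mock low-level command for its device."""
    device = getattr(fn, "device", "cpu")  # no hint means CPU
    if device not in BACKENDS:
        raise ValueError(f"no backend registered for {device!r}")
    return BACKENDS[device](fn.__name__)


@on("gpu")
def matmul_step():
    ...


print(compile_step(matmul_step))  # gpu_kernel(matmul_step)


# A new chip appears: one small definition, no library update needed.
@backend("npu")
def lower_npu(op: str) -> str:
    return f"npu_kernel({op})"


@on("npu")
def attention_step():
    ...


print(compile_step(attention_step))  # npu_kernel(attention_step)
```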

What supporting tools do we need?

A language is only half the story. The other half is its toolbox:

An AI‑aware assistant that can finish functions, suggest fixes, and explain errors because the code’s structure is simple and consistent.

A time‑travel debugger that lets you jump back to any training step and ask in plain English, “Why did the accuracy drop here?”

Version control that tracks data and model files along with code, so you can always rebuild an old experiment.
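The recording half of a time‑travel debugger can be sketched in a few lines: snapshot the training state at every step, then rewind to any step and inspect it. The StepRecorder class is hypothetical. It stores full copies in memory and answers one narrow question (which step had the largest accuracy drop) rather than a plain‑English one. The accuracy values are made up purely for the demo.

```python
import copy


class StepRecorder:
    """Keep a snapshot of the training state at every step so we can rewind."""

    def __init__(self) -> None:
        self.snapshots: list[dict] = []

    def record(self, state: dict) -> None:
        self.snapshots.append(copy.deepcopy(state))  # freeze this step's state

    def rewind(self, step: int) -> dict:
        return copy.deepcopy(self.snapshots[step])  # a copy, so history stays intact

    def biggest_drop(self, metric: str) -> int:
        """Return the step whose metric fell the most versus the previous step.

        Needs at least two recorded steps.
        """
        drops = [
            (self.snapshots[i - 1][metric] - self.snapshots[i][metric], i)
            for i in range(1, len(self.snapshots))
        ]
        return max(drops)[1]


recorder = StepRecorder()
accuracies = [0.50, 0.62, 0.71, 0.58, 0.66]  # hypothetical values for illustration
for step, acc in enumerate(accuracies):
    recorder.record({"step": step, "accuracy": acc, "lr": 0.1 / (step + 1)})

bad = recorder.biggest_drop("accuracy")
print(bad)                       # 3
print(recorder.rewind(bad - 1))  # the full state just before the drop
```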

Taken together, these tools turn messy notebooks and ad‑hoc scripts into a clean, repeatable workflow.
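For the version‑control point, one common approach is content hashing, the same idea Git applies to source files. For every run, record a hash of each code, data, and model file, so you can tell exactly what changed between two experiments. The sketch below uses Python's hashlib with SHA‑256. The file names and the role‑to‑hash manifest format are hypothetical.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def fingerprint(path: Path) -> str:
    """SHA-256 of a file's bytes: identical content gives an identical id."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def snapshot(files: dict[str, Path]) -> dict[str, str]:
    """Map each role (code, data, model) to the hash of its file."""
    return {role: fingerprint(p) for role, p in sorted(files.items())}


def changed(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """List the roles whose content differs between two snapshots."""
    return sorted(r for r in old.keys() | new.keys() if old.get(r) != new.get(r))


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    files = {
        "code": root / "train.py",
        "data": root / "train.csv",
        "model": root / "model.bin",
    }
    files["code"].write_text("lr = 0.1\n")
    files["data"].write_text("x,y\n1,2\n")
    files["model"].write_bytes(b"\x00\x01")
    run_1 = snapshot(files)

    files["data"].write_text("x,y\n1,2\n3,4\n")  # the data set changes
    run_2 = snapshot(files)

print(changed(run_1, run_2))        # ['data']
print(json.dumps(run_2, indent=2))  # a manifest to commit next to the code
```

Committing a small manifest like this alongside the code is enough to tell which data and model produced a result. The large files themselves can live in separate storage.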

Where do we go from here?

An AI‑first programming language should be clean on the surface and strict enough to catch errors early. And it should be honest about the fact that AI work runs on many machines at once.

Languages like Lisp, Scheme, and Clojure prove that a small, regular syntax makes powerful tooling possible. Rust and Haskell show how strong checks keep large systems dependable. Go and Erlang show that clear words for parallel work beat magic in the background. Blend these ideas and we get a language that lets human developers and machine helpers work together smoothly. It’s a better fit for the AI age than the tools we have right now.

If you're a founder or an investor who has been thinking about this, I'd love to hear from you.

If you are getting value from this newsletter, consider subscribing for free and sharing it with one friend who’s curious about AI.

This is something I have also been thinking about. I asked AI, and thought it would be a fun experiment to see how far I could get Claude Code to take it. The repository at https://github.com/beamsjr/FluentAI shows the path we have gone down. Thanks for this article. I'm glad I am not the only one thinking about this.